AAP/Jono Searle

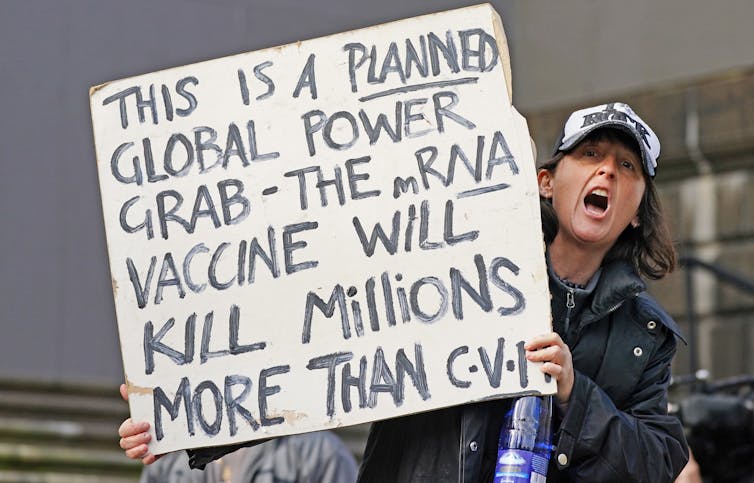

Denis Muller, The University of Melbourne

Clive Palmer’s United Australia Party advertisements, which implicitly object to COVID-19 lockdowns, demonstrate one more way in which the freedoms essential to a democracy can be abused to the detriment of the public interest.

Democracies protect freedom of speech, especially political speech, because without it democracy cannot work. When speech is harmful, however, laws and ethical conventions exist to curb it.

The laws regulating political advertising are minimal.

Section 329 of the Commonwealth Electoral Act is confined to the issue of whether a publication is likely to mislead or deceive an elector in relation to the casting of a vote. It has nothing to say about truth in political advertising for the good reason that defining truth in that context would be highly subjective and therefore oppressive.

Sections 52 and 53 of the Trade Practices Act prohibit corporations from engaging in misleading or deceptive conduct, or from making false or misleading representations. The act has nothing to say about political advertising.

Ad Standards, the industry self-regulator, has a code of ethics that enjoins advertisers not to engage in misleading or deceptive conduct. It is a general rule that applies to all advertising, political or not.

The Palmer ads do not violate any of these provisions.


So where does that leave media organisations approached by the likes of Palmer to publish advertisements that are not false, misleading or deceptive, but are clearly designed to undermine public support for public health measures such as lockdowns?

It leaves them having to decide whether to exercise an ethical prerogative.

Short of a legal requirement to do so (say, in settlement of a lawsuit), no media organisation is obliged to publish an advertisement. It is in almost all cases an ethical decision.

Naturally, freedom of speech imposes a heavy ethical burden to publish, but it is not the only consideration. John Stuart Mill’s harm principle becomes relevant. That principle says the prevention of harm to others is a legitimate constraint on individual freedom.

Undermining public support for public health measures is obviously harmful and against the public interest. Media organisations are entitled to make decisions on ethical bases like this. An example from relatively ancient history will illustrate the point.

In the late 1970s, Four Corners ran a program alleging that the Utah Development Corporation’s mining activities in Queensland were causing environmental damage. A few days after the program was broadcast, The Sydney Morning Herald received a full-page advertisement from Utah not only repudiating what Four Corners had said but attacking the professional integrity of the journalists who made the program.

I was chief of staff of the Herald that day and the advertisement was referred to me, partly because it contained the seeds of what might have been a news story and partly because there were concerns it might be defamatory.

I referred it to the executive assistant to the editor, David Bowman, who refused to publish it.

He objected to it not only on legal grounds but on ethical grounds, because it impugned the integrity of the journalists in circumstances where they would have no opportunity to respond. In his view, this was unfair.

A short while later, the advertising people came back saying Utah had offered to indemnify the Herald against any legal damages or costs arising from publication of the advertisement.

Bowman held to his ethical objection and was supported by the general manager, R. P. Falkingham, who said: “You don’t publish something just because a man with a lot of money stands behind you.”

The advertisement did not run, not because of the legal risks but because it would have breached the ethical value of fairness.

Palmer’s ads – which say lockdowns are bad for mental health, bad for jobs and bad for the economy – contain truisms. There is nothing false or misleading about them. But they clearly seek to exploit public resentment about lockdowns for political gain.

The clear intention is to stir up opposition and make the public health orders harder to enforce.


We live in an age where there are not only high levels of public anxiety, but also a great deal of confusion about who to believe on matters such as climate change and the pandemic. It is against the public interest to add gratuitously to that confusion, and harmful to the public welfare to undermine health orders.

These are grounds for rejecting his advertisements.

Nine Entertainment, which publishes The Age, The Sydney Morning Herald and The Australian Financial Review, has rejected Palmer ads that contain misinformation about the pandemic, including about vaccines. Clearly such ads violate the rules against misleading and deceptive content.

But the ads opposing lockdowns on economic or health grounds were initially accepted by Nine, and are still running in News Corporation publications.

The question now is whether media organisations are willing to make decisions based on ethical considerations that are wider than the narrow standard of deception.

Denis Muller, Senior Research Fellow, Centre for Advancing Journalism, The University of Melbourne

This article is republished from The Conversation under a Creative Commons license. Read the original article.
